Orphaned No More: Adopting AWS Lambda

Background

HumanGov is a Model Automated Multi-Tenant Architecture (MAMA) meant to be used by the 50 states for personnel tracking. The architecture is fully automated on AWS but is not yet ready for go-live: everything so far is a proof of concept to be presented to the Chief Technical Officer (CTO) for review.

During the weekly team meeting, Ko (User Experience Lead) reports that his team has run thousands of tests against the infrastructure, covering moves, adds, and changes, and has found no issues in the application user interface. Woo (Infrastructure Lead) reports that there are hundreds of 'orphaned' files in the S3 bucket. (These files are considered 'orphaned' because they have no corresponding records in the DynamoDB table.) Kim (Tech Lead) reports that there is no trigger to delete a user's file from the bucket when that user is deleted from the DynamoDB table. Brady (Lawyer) reports that failing to delete files the government has asked to be deleted would be a breach of contract.

You first get the team to agree on the set of problems (and their root causes):

Problem 1: There is no trigger for when records are deleted from the DynamoDB table. (DynamoDB Streams is not enabled.)

Problem 2: There are orphaned files in the S3 bucket. (Files are not deleted from the S3 bucket when users are deleted from the database.)

Problem 3: The contractual obligation is not being met. (Files are not deleted when requested.)

After some thought, you propose the following solutions to the reported issues:

Use Amazon DynamoDB Streams to signal when an entry is deleted from the table. (Provides a trigger for when records are deleted from the table.)

Use AWS Lambda to clean up the S3 bucket based on the DynamoDB stream. (Eliminates orphaned files.)

Use AWS Lambda to clean up the S3 bucket based on the DynamoDB stream. (Meets the contractual requirement.)

In this article, you will make sure the files are orphaned no more by adopting AWS Lambda.

Here are the main technologies used in this solution:

Amazon DynamoDB Streams

DynamoDB Streams provides a time-ordered sequence of item-level modifications to a DynamoDB table. Once a stream is enabled, it captures information about every modification to items in the table. In this solution, the stream is used to make sure the S3 bucket is cleaned up when entries are deleted from the DynamoDB table. Note that stream records are retained for only 24 hours.
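To make the stream concrete: with the NEW_AND_OLD_IMAGES view type, a deletion arrives as a REMOVE record whose OldImage holds the deleted item in DynamoDB's typed JSON, where every value is wrapped in a type descriptor such as "S" (string) or "N" (number). The sketch below uses a hand-written sample record (the attribute names `id`, `name`, and `pdf` are assumptions modeled on the HumanGov table) and a minimal, hypothetical converter that handles only a few scalar types; boto3's `TypeDeserializer` is the full-featured option.

```python
from decimal import Decimal

# Hypothetical REMOVE record, trimmed to the fields this solution reads.
# The attribute names (id, name, pdf) are assumptions for illustration.
SAMPLE_REMOVE_RECORD = {
    "eventName": "REMOVE",
    "dynamodb": {
        "Keys": {"id": {"S": "42"}},
        "OldImage": {
            "id": {"S": "42"},
            "name": {"S": "Gavin Newsom"},
            "pdf": {"S": "gavin-newsom.pdf"},
        },
        "StreamViewType": "NEW_AND_OLD_IMAGES",
    },
}


def from_dynamodb_json(image):
    """Convert a typed DynamoDB image to plain Python values.

    Handles only the S, N, BOOL, and NULL types; anything else raises ValueError.
    """
    plain = {}
    for name, typed_value in image.items():
        # Each attribute is a one-entry dict: {type_tag: value}.
        type_tag, value = next(iter(typed_value.items()))
        if type_tag in ("S", "BOOL"):
            plain[name] = value
        elif type_tag == "N":
            plain[name] = Decimal(value)  # numbers are transmitted as strings
        elif type_tag == "NULL":
            plain[name] = None
        else:
            raise ValueError(f"unsupported type {type_tag!r} for attribute {name!r}")
    return plain
```

For example, `from_dynamodb_json(SAMPLE_REMOVE_RECORD["dynamodb"]["OldImage"])["pdf"]` gives `"gavin-newsom.pdf"`, which is the S3 key the Lambda function later deletes.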

AWS Lambda

AWS Lambda lets you run code without provisioning or managing servers. In this solution, we take advantage of the fact that a Lambda function can be triggered by other AWS services, such as DynamoDB Streams.

Amazon CloudWatch Log Group/Log Stream

A log stream is a sequence of log events from a single source. A log group is a group of log streams that share the same settings. We covered CloudWatch in an earlier article, but not log groups specifically. In this solution, the log group and its log streams capture the logs of the Lambda function.

Prerequisite 1 of 3. Elastic Kubernetes Service (EKS) | Cluster

You need a Kubernetes cluster named `humangov-cluster`, running on Amazon EKS, with at least one HumanGov application state deployed.

If you need instructions for that, see the earlier series on Kubernetes.

If you followed my example from the Kubernetes article, you probably have a few pieces to redo, so I've listed them here as a checklist. Disclaimer: these steps assume you completed the prior series; if something doesn't work for you, refer back to it. For brevity, screenshots are omitted here; see the prior articles if you want images.

#1 of 15. IAM | eks-user Access keys

AWS Console -/- Identity and Access Management (IAM) -/- Access management -/- Users [eks-user]

[Security credentials]

[Create access key]

Access key best practices & alternatives -/- Other [Next]

Set description tag -optional [Create access key]

Retrieve access keys [Done]

#2 of 15. Cloud9 | Disable managed credentials

Preferences -/- AWS Settings -/- Credentials -/- DISABLE 'AWS managed temporary credentials'

#3 of 15. Cloud9 | Authenticate with eks-user access key

export AWS_ACCESS_KEY_ID=XXXXXXXXXXXXXXXX

export AWS_SECRET_ACCESS_KEY=YYYYYYYYYYYYYYYYYYYYYYYYY
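Before creating the cluster, it can help to confirm which identity those environment variables resolve to (from the shell, `aws sts get-caller-identity` does this). A minimal Python sketch, assuming boto3 is available in the Cloud9 environment: one helper reports missing variables, and the other takes an STS client and returns the caller's ARN. Both helper names are hypothetical.

```python
import os


def check_env_credentials(environ=os.environ):
    """Return the names of required credential variables that are missing or empty."""
    required = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY")
    return [name for name in required if not environ.get(name)]


def caller_arn(sts_client):
    """Return the ARN of the identity the STS client is authenticated as."""
    return sts_client.get_caller_identity()["Arn"]
```

In practice you'd call `caller_arn(boto3.client("sts"))`; the returned ARN should end in `:user/eks-user`.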


#4 of 15. Cloud9 | Create eks cluster [Warning: this step may take 15 minutes or so]

cd ~/environment/human-gov-infrastructure/terraform

eksctl create cluster --name humangov-cluster --region us-east-1 --nodegroup-name standard-workers --node-type t3.medium --nodes 1

#5 of 15. Cloud9 | Update local Kubernetes config

aws eks update-kubeconfig --name humangov-cluster --region us-east-1

#6 of 15. Cloud9 | Verify Cluster Connectivity

kubectl get svc

kubectl get nodes

#7 of 15. Cloud9 | Load Balancer

cd ~/environment

curl -O https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.5.4/docs/install/iam_policy.json

aws iam create-policy \
--policy-name AWSLoadBalancerControllerIAMPolicy \
--policy-document file://iam_policy.json

#8 of 15. Cloud9 | Associate IAM OIDC provider

eksctl utils associate-iam-oidc-provider --cluster humangov-cluster --approve

#9 of 15. Cloud9 | Create service account for load balancer.

# Look up your own AWS account ID for the policy ARN.
AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)
eksctl create iamserviceaccount \
--cluster=humangov-cluster \
--namespace=kube-system \
--name=aws-load-balancer-controller \
--role-name AmazonEKSLoadBalancerControllerRole \
--attach-policy-arn=arn:aws:iam::${AWS_ACCOUNT_ID}:policy/AWSLoadBalancerControllerIAMPolicy \
--approve
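For reference, a customer-managed policy created without a custom path (as in step 7) has an ARN of the form `arn:aws:iam::<account-id>:policy/<policy-name>`, so the value passed to `--attach-policy-arn` must contain your own 12-digit account ID. The hypothetical helper below builds that ARN and rejects malformed IDs; it's a sketch for anyone scripting these steps in Python, not part of eksctl.

```python
import re


def customer_policy_arn(account_id, policy_name="AWSLoadBalancerControllerIAMPolicy", partition="aws"):
    """Build the ARN of a customer-managed IAM policy created at the default path '/'."""
    if not re.fullmatch(r"\d{12}", account_id):
        raise ValueError(f"AWS account IDs are 12 digits, got {account_id!r}")
    return f"arn:{partition}:iam::{account_id}:policy/{policy_name}"
```

The account ID itself can come from STS, e.g. `boto3.client("sts").get_caller_identity()["Account"]`.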

#10 of 15. Cloud9 | Install load balancer controller

# Add the eks-charts repository.
helm repo add eks https://aws.github.io/eks-charts
# Install the controller.
helm install aws-load-balancer-controller eks/aws-load-balancer-controller \
-n kube-system \
--set clusterName=humangov-cluster \
--set serviceAccount.create=false \
--set serviceAccount.name=aws-load-balancer-controller

#11 of 15. Cloud9 | Verify controller installation

kubectl get deployment -n kube-system aws-load-balancer-controller

#12 of 15. Cloud9 | Create role and service account for cluster to S3 and DynamoDB tables

eksctl create iamserviceaccount \
--cluster=humangov-cluster \
--name=humangov-pod-execution-role \
--role-name HumanGovPodExecutionRole \
--attach-policy-arn=arn:aws:iam::aws:policy/AmazonS3FullAccess \
--attach-policy-arn=arn:aws:iam::aws:policy/AmazonDynamoDBFullAccess \
--region us-east-1 \
--approve

#13 of 15. Cloud9 | Deploy the states

cd ~/environment/human-gov-application/src

kubectl get pods

kubectl apply -f humangov-california.yaml

kubectl apply -f humangov-florida.yaml

kubectl apply -f humangov-staging.yaml

kubectl get pods

kubectl get svc

kubectl get deployment

#14 of 15. Cloud9 | Ingress

kubectl apply -f humangov-ingress-all.yaml

kubectl get ingress

#15 of 15. Route 53 | Double-check DNS

Make sure the A records for california.humangov-ll3.click and florida.humangov-ll3.click point to the new load balancer you just created.

Access the web pages, and confirm they're operational.

Prerequisite 2 of 3. DynamoDB | Table and Streams

A table named humangov-california-dynamodb. DynamoDB streams must be enabled on this table. If you followed the previous deployment labs in this series, then you already have this table.

DynamoDB

Tables -/- humangov-california-dynamodb -/- [Exports and streams]

DynamoDB Stream details -/- [Turn on]

NEW_AND_OLD_IMAGES -/- [Turn on stream]

[Save]
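The same change can be scripted. A minimal sketch, assuming a boto3 DynamoDB client is passed in: `update_table` with a `StreamSpecification` turns the stream on with the NEW_AND_OLD_IMAGES view type, and `describe_table` exposes the resulting `LatestStreamArn`, which you'd need to create the Lambda trigger from code. The helper (a hypothetical name) checks the current setting first, because the Lambda function relies on OldImage and an already-enabled stream cannot simply be enabled again.

```python
STREAM_SPEC = {"StreamEnabled": True, "StreamViewType": "NEW_AND_OLD_IMAGES"}


def ensure_stream(dynamodb_client, table_name):
    """Enable a NEW_AND_OLD_IMAGES stream on the table if none is enabled.

    Returns the table's latest stream ARN.
    """
    table = dynamodb_client.describe_table(TableName=table_name)["Table"]
    spec = table.get("StreamSpecification", {})
    if spec.get("StreamEnabled"):
        # The cleanup function reads OldImage, so the view type must include it.
        if spec.get("StreamViewType") not in ("NEW_AND_OLD_IMAGES", "OLD_IMAGE"):
            raise RuntimeError(f"stream on {table_name} does not include old images")
        return table["LatestStreamArn"]
    dynamodb_client.update_table(TableName=table_name, StreamSpecification=STREAM_SPEC)
    # Re-read the table description to pick up the new stream ARN.
    return dynamodb_client.describe_table(TableName=table_name)["Table"]["LatestStreamArn"]
```

Usage would look like `ensure_stream(boto3.client("dynamodb"), "humangov-california-dynamodb")`.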

Prerequisite 3 of 3. S3 Bucket

You need an existing S3 bucket. If you followed the previous deployment labs in this series, then you already have one.

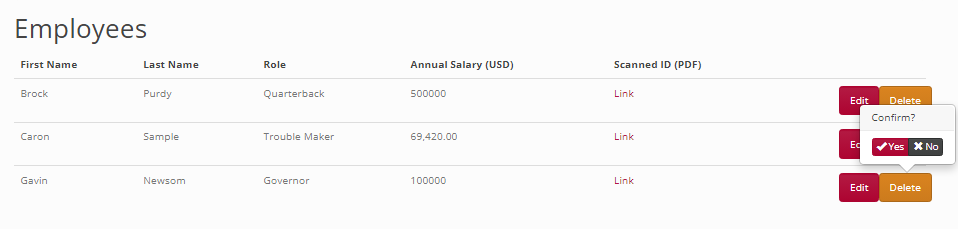

1 of 14. [HumanGov | DynamoDB | S3] Validating the Bug Exists

In california.humangov-ll3.click, delete an employee: Gavin Newsom

Check the DynamoDB table: the record for Gavin Newsom is removed.

Check the S3 bucket: the corresponding PDF for Gavin Newsom remains.
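To gauge how widespread the problem is (the hundreds of orphaned files Woo reported), you can compare the PDF keys referenced by the table with the objects in the bucket. A minimal sketch, assuming each item stores its S3 key in a `pdf` string attribute (as the Lambda code in step 3 does), that boto3 clients are passed in, and that the bucket is not versioned. The helper names are hypothetical, and the sketch only reports orphans; it deletes nothing.

```python
def referenced_pdf_keys(dynamodb_client, table_name):
    """Collect the 'pdf' attribute of every item in the table (paginated Scan)."""
    keys = set()
    paginator = dynamodb_client.get_paginator("scan")
    pages = paginator.paginate(
        TableName=table_name,
        ProjectionExpression="#p",
        ExpressionAttributeNames={"#p": "pdf"},  # avoids any reserved-word clash
    )
    for page in pages:
        for item in page["Items"]:
            if "pdf" in item:
                keys.add(item["pdf"]["S"])
    return keys


def bucket_keys(s3_client, bucket):
    """Collect every object key in the bucket (paginated ListObjectsV2)."""
    keys = set()
    for page in s3_client.get_paginator("list_objects_v2").paginate(Bucket=bucket):
        for obj in page.get("Contents", []):  # "Contents" is absent on empty pages
            keys.add(obj["Key"])
    return keys


def find_orphans(table_pdf_keys, s3_object_keys):
    """Keys present in S3 with no matching DynamoDB record, sorted for stable output."""
    return sorted(set(s3_object_keys) - set(table_pdf_keys))
```

With real clients, `find_orphans(referenced_pdf_keys(ddb, table), bucket_keys(s3, bucket))` should list the Gavin Newsom PDF at this point.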

2 of 14. [Lambda] Function Execution Roles

Create a Python function and grant it permissions to S3 and DynamoDB.

Lambda -/- [Create a function]

[Author from scratch]

Function name: HumanGovDeleteFile

Runtime: Python 3.12

Permissions

- Change default execution role

- Execution role: Create a new role with basic Lambda permissions

[Create function]

[Configuration] -/- Permissions -/- [HumanGovDeleteFile-role-xxxx]

Permissions -/- Add permissions -/- [Attach policies]

- AmazonS3FullAccess

- AmazonDynamoDBFullAccess
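AmazonS3FullAccess and AmazonDynamoDBFullAccess are convenient for a proof of concept but grant far more than this function uses. As a hedged alternative for later hardening, the sketch below builds an inline policy limited to reading the table's stream (the DescribeStream, GetRecords, GetShardIterator, and ListStreams actions that a DynamoDB trigger needs) and deleting objects from one bucket. The helper name is hypothetical, and the ARNs are placeholders you'd fill in. CloudWatch Logs permissions are omitted because the basic execution role created above already covers them.

```python
import json


def delete_file_policy(stream_arn, bucket_name):
    """Return a narrowly scoped IAM policy (JSON string) for HumanGovDeleteFile."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Lets the DynamoDB trigger read records from this table's stream.
                "Effect": "Allow",
                "Action": [
                    "dynamodb:DescribeStream",
                    "dynamodb:GetRecords",
                    "dynamodb:GetShardIterator",
                ],
                "Resource": stream_arn,
            },
            {
                # ListStreams is a list action, so it is granted on "*" here.
                "Effect": "Allow",
                "Action": "dynamodb:ListStreams",
                "Resource": "*",
            },
            {
                # The function's only S3 call is DeleteObject on this bucket.
                "Effect": "Allow",
                "Action": "s3:DeleteObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            },
        ],
    }
    return json.dumps(policy, indent=2)
```

A stream ARN such as `arn:aws:dynamodb:us-east-1:<account-id>:table/humangov-california-dynamodb/stream/*` covers the table's current stream. You could save the output to a file and attach it with `aws iam put-role-policy --role-name <role> --policy-name <name> --policy-document file://policy.json`.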

3 of 14. [Lambda] Function Code

Back in Lambda, add the code below to your function. Whenever the DynamoDB stream reports a deleted item (a REMOVE event), the code deletes the corresponding PDF from the S3 bucket.

Make sure that the bucket name in the code matches your S3 bucket name.

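import boto3

# Replace with the name of your HumanGov S3 bucket.
BUCKET_NAME = 'humangov-california-s3-zsmb'

s3_client = boto3.client('s3')

def lambda_handler(event, context):
    # Each invocation can carry a batch of DynamoDB stream records.
    for record in event['Records']:
        # Only deletions matter here; INSERT and MODIFY events are ignored.
        if record['eventName'] == 'REMOVE':
            # OldImage is the item as it was before deletion
            # (present because the stream uses NEW_AND_OLD_IMAGES).
            pdf_filename = record['dynamodb']['OldImage']['pdf']['S']
            try:
                s3_client.delete_object(Bucket=BUCKET_NAME, Key=pdf_filename)
                print(f'Successfully deleted {pdf_filename} from the S3 bucket.')
            except Exception as e:
                print(f'Error deleting {pdf_filename}: {e}')
                # Re-raise so Lambda treats the batch as failed and retries it.
                raise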

4 of 14. [Lambda] Deploy Function

Click 'Deploy'
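Before wiring up the trigger, you can exercise the deployed code from the Lambda console's Test tab, which accepts a JSON event. The sketch below (the helper name and the `id` key attribute are hypothetical) generates a minimal REMOVE event containing only the fields the handler reads. Use the key of a throwaway file you uploaded for the purpose, because the function really will delete it.

```python
import json


def make_remove_event(pdf_key, item_id="test-id"):
    """Build a minimal DynamoDB Streams REMOVE event for a Lambda console test.

    Only the fields read by lambda_handler are included; real events carry more.
    The 'id' attribute is a hypothetical key name.
    """
    return {
        "Records": [
            {
                "eventName": "REMOVE",
                "eventSource": "aws:dynamodb",
                "dynamodb": {
                    "Keys": {"id": {"S": item_id}},
                    "OldImage": {"id": {"S": item_id}, "pdf": {"S": pdf_key}},
                    "StreamViewType": "NEW_AND_OLD_IMAGES",
                },
            }
        ]
    }


# Paste the printed JSON into the console's test event editor.
print(json.dumps(make_remove_event("test-upload.pdf"), indent=2))
```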

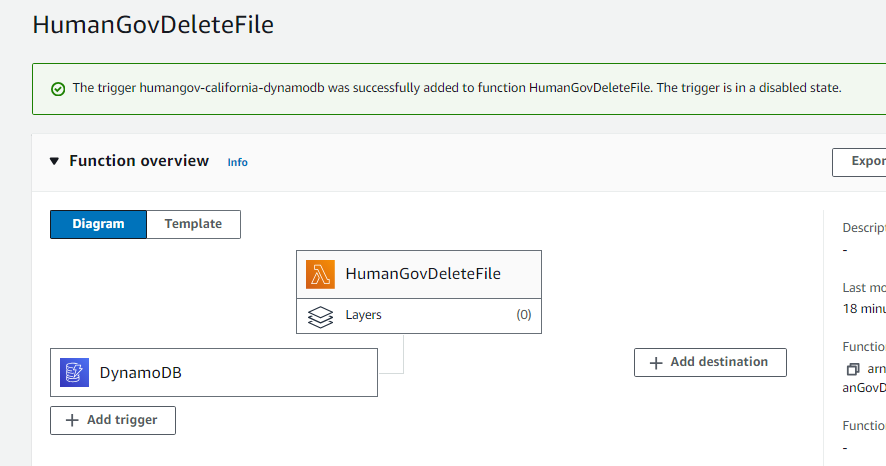

5 of 14. [Lambda | DynamoDB] Trigger

Lambda -/- function -/- [HumanGovDeleteFile]

Configuration -/- Triggers -/- [Add trigger]

Source: DynamoDB

DynamoDB table: humangov-california-dynamodb

[Add]
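Behind the scenes, the console trigger is a Lambda event source mapping. A hedged boto3 sketch of creating one from code, assuming a Lambda client is passed in and the stream ARN comes from `describe_table`: it also adds an event filter so that only REMOVE records invoke the function, which keeps the handler's `eventName` check as a second safeguard. Use this instead of the console trigger, not in addition to it, or each deletion will invoke the function twice. The helper name is hypothetical.

```python
import json

# Event filter: forward only deletions to the cleanup function.
REMOVE_ONLY = {"Filters": [{"Pattern": json.dumps({"eventName": ["REMOVE"]})}]}


def add_stream_trigger(lambda_client, stream_arn, function_name="HumanGovDeleteFile"):
    """Create a DynamoDB Streams event source mapping that forwards only REMOVE events."""
    response = lambda_client.create_event_source_mapping(
        EventSourceArn=stream_arn,
        FunctionName=function_name,
        StartingPosition="LATEST",  # only changes made after the mapping exists
        BatchSize=100,
        FilterCriteria=REMOVE_ONLY,
    )
    return response["UUID"]
```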

6 of 14. [CloudWatch] Log Group

Lambda -/- Monitor -/- View CloudWatch Logs

Log groups -/- [create log group]

Log group name: /aws/lambda/HumanGovDeleteFile

[Create]

Revisit the Lambda function and confirm that Monitor -/- View CloudWatch Logs now opens the log group.

7 of 14. [HumanGov Application] Deletion

In california.humangov-ll3.click, delete an employee: Brock Purdy

8 of 14. DynamoDB | S3 | Validate Deletion

Check the DynamoDB table and the S3 bucket: both the record and the PDF for Brock Purdy should be gone now.
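The S3 half of that check can also be scripted. A minimal sketch, assuming a boto3 S3 client is passed in: it calls `list_objects_v2` with the exact key as the prefix, so a missing object simply yields no results rather than an error, then compares keys exactly. The helper name is hypothetical, and the PDF key you pass depends on how HumanGov named the upload.

```python
def object_exists(s3_client, bucket, key):
    """Return True if an object with exactly this key exists in the bucket."""
    response = s3_client.list_objects_v2(Bucket=bucket, Prefix=key)
    # A prefix can also match longer keys (e.g. 'a.pdf.bak'), so compare exactly.
    # Only the first page (up to 1,000 keys) is checked, which is ample here.
    return any(obj["Key"] == key for obj in response.get("Contents", []))
```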

9 of 14. [CloudWatch] Validate Deletion

Lambda -/- Functions -/- [HumanGovDeleteFile]

Monitor -/- [View CloudWatch Logs]

Log streams -/- [click on the most recent stream]
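If you'd rather read the logs from a script than click through the console, CloudWatch Logs exposes the same data through `describe_log_streams` (sorted by `LastEventTime`) and `get_log_events`. A minimal sketch, assuming a boto3 `logs` client is passed in and the `/aws/lambda/HumanGovDeleteFile` log group from step 6; it reads only the first page of events, which is plenty for a single test deletion. The helper name is hypothetical.

```python
def latest_log_messages(logs_client, function_name="HumanGovDeleteFile"):
    """Return the messages from the most recently active log stream of a Lambda function."""
    group = f"/aws/lambda/{function_name}"
    streams = logs_client.describe_log_streams(
        logGroupName=group, orderBy="LastEventTime", descending=True, limit=1
    )["logStreams"]
    if not streams:
        return []
    events = logs_client.get_log_events(
        logGroupName=group, logStreamName=streams[0]["logStreamName"], startFromHead=True
    )["events"]
    # Strip trailing newlines that Lambda appends to each message.
    return [event["message"].rstrip("\n") for event in events]
```

After deleting Brock Purdy, one of the returned lines should start with "Successfully deleted".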

10 of 14. [Cloud9] Cleanup | Delete Kubernetes Ingress

cd ~/environment/human-gov-application/src

kubectl delete -f humangov-ingress-all.yaml

11 of 14. [Cloud9] Cleanup | Delete Kubernetes Application resources

kubectl delete -f humangov-california.yaml

kubectl delete -f humangov-florida.yaml

kubectl delete -f humangov-staging.yaml

12 of 14. [Cloud9] Cleanup | Delete Kubernetes Cluster

eksctl delete cluster --name humangov-cluster --region us-east-1

# Go back to the AWS Console and validate that the cluster is gone.

13 of 14. [Cloud9] Cleanup | Terraform Destroy

Destroy any resources you deployed via Terraform. Reminder: an S3 bucket must be empty before it can be deleted.

cd ~/environment/human-gov-infrastructure/terraform

terraform show

terraform destroy
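If `terraform destroy` fails because a bucket still contains objects, one option is to empty it first. A hedged sketch, assuming an unversioned bucket (a versioned bucket would need `list_object_versions` and version IDs instead) and a boto3 S3 client passed in: it lists every key, then removes them with `delete_objects`, which accepts at most 1,000 keys per request. This is irreversible, so double-check the bucket name. Alternatively, setting `force_destroy = true` on the Terraform `aws_s3_bucket` resource lets Terraform delete a non-empty bucket. The helper name is hypothetical.

```python
def empty_bucket(s3_client, bucket):
    """Delete every object in an unversioned bucket. Returns the number of keys deleted."""
    # List everything first so deletions don't interfere with pagination.
    keys = []
    for page in s3_client.get_paginator("list_objects_v2").paginate(Bucket=bucket):
        keys.extend(obj["Key"] for obj in page.get("Contents", []))
    # delete_objects accepts at most 1,000 keys per request.
    for start in range(0, len(keys), 1000):
        batch = [{"Key": key} for key in keys[start:start + 1000]]
        response = s3_client.delete_objects(Bucket=bucket, Delete={"Objects": batch, "Quiet": True})
        errors = response.get("Errors", [])
        if errors:
            raise RuntimeError(f"failed to delete {len(errors)} objects, e.g. {errors[0]}")
    return len(keys)
```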

14 of 14. [Route53] Cleanup | Hosted Zone and Registered Domain

You can keep these resources if you want to practice some more. (You've already paid for the domain for a year, so it's your choice.)

